Meta Learning

We are investigating new algorithms and applications for meta-learning with neural processes, such as new aggregation methods and more efficient variational inference approaches. In terms of applications, we explore meta-learning for dynamical systems as well as for vision regression tasks such as pose estimation.

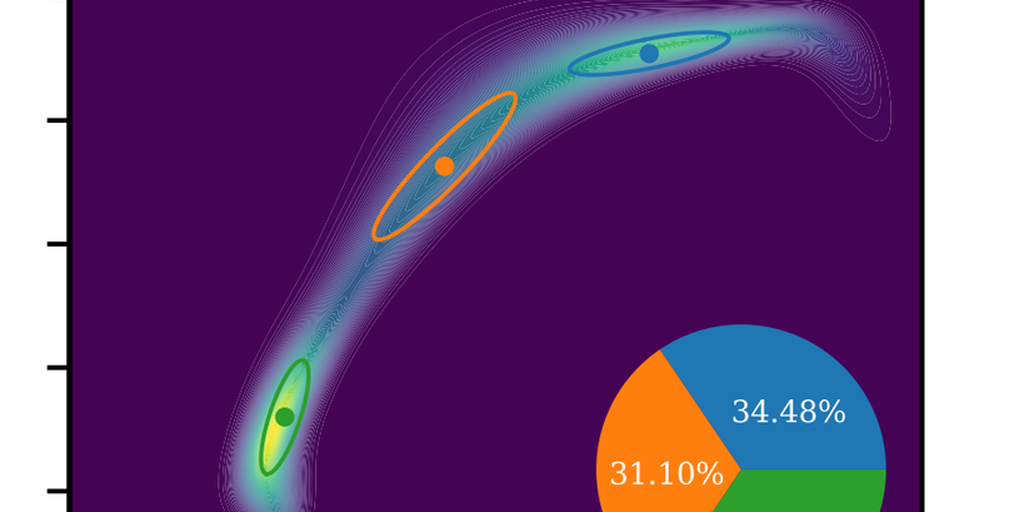

The Neural Process (NP) is a prominent deep neural network-based Bayesian meta-learning (BML) architecture that has shown remarkable results in recent years. Prior work studies a range of architectural modifications to boost performance, such as attentive computation paths or improved context aggregation schemes, while the influence of the variational inference (VI) scheme remains under-explored. GMM-NP does not require complex architectural modifications. The result is a powerful yet conceptually simple BML model that outperforms the state of the art on a range of challenging experiments, highlighting its applicability to settings where data is scarce.

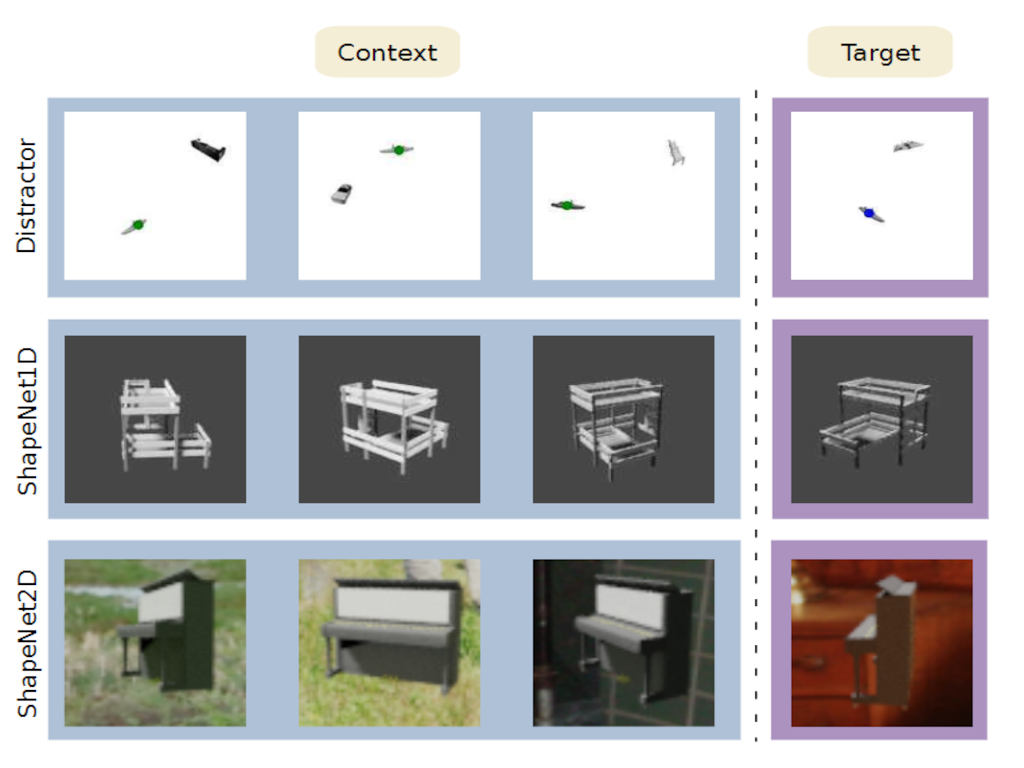

We design two new types of cross-category level vision regression tasks, namely object discovery and pose estimation, which are of unprecedented complexity in the meta-learning domain for computer vision. On these tasks, we exhaustively evaluate common meta-learning techniques with the aim of strengthening generalization capability. Furthermore, we propose functional contrastive learning (FCL) over the task representations in Conditional Neural Processes (CNPs) and train the model end to end.
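To make the idea of a contrastive objective over task representations more concrete, here is a minimal sketch in plain Python. It assumes an InfoNCE-style loss with cosine similarity, which is one common choice and not necessarily the exact FCL objective. The function names (`cosine`, `info_nce`), the temperature value, and the pairing scheme (two representations of the same task as the positive pair, representations of other tasks as negatives) are illustrative assumptions.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(
    anchor: Sequence[float],
    positive: Sequence[float],
    negatives: List[Sequence[float]],
    temperature: float = 0.1,  # assumed value, not taken from the source
) -> float:
    """InfoNCE-style loss for one anchor task representation.

    anchor / positive: representations assumed to come from the same task
    (e.g. from two different context sets); negatives: other tasks.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # Log-sum-exp with max subtraction for numerical stability.
    m = max(logits)
    log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
    # Negative log-probability of picking the positive among all candidates.
    return log_denominator - logits[0]
```

The loss is small when the anchor is closer to its positive than to the negatives, which pushes representations of the same task together and those of different tasks apart.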

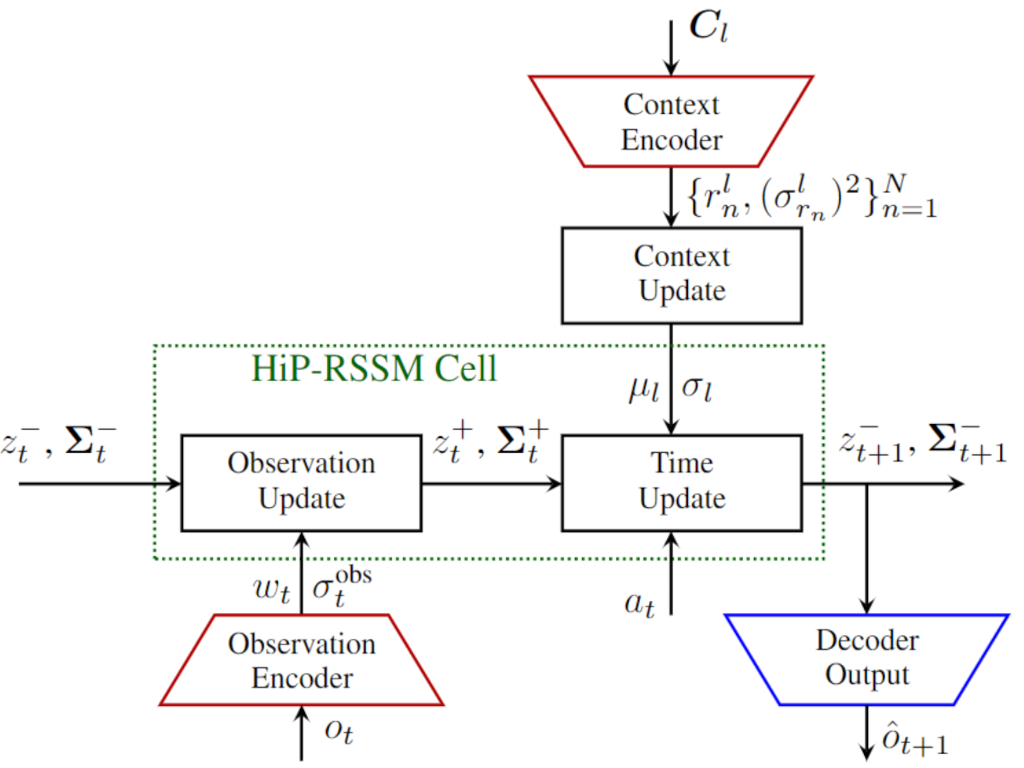

We propose a multi-task deep Kalman model that can adapt to changing dynamics and environments. The model achieves state-of-the-art performance on several non-stationary robotic benchmarks with little computational overhead.

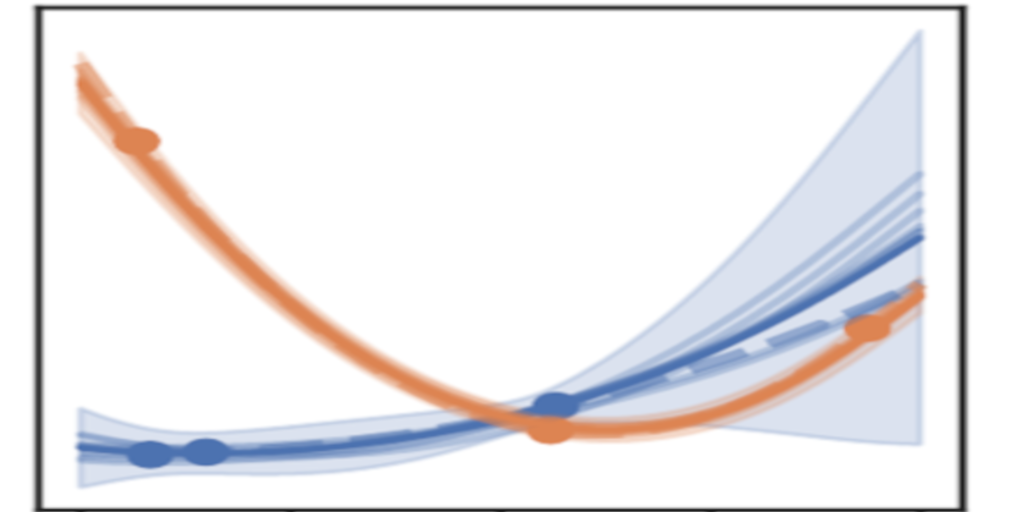

Neural Processes are powerful tools for probabilistic meta-learning. Yet they typically rely on rather basic aggregation methods, such as a mean aggregator over the context set, which does not give consistent uncertainty estimates and leads to poor prediction performance. Aggregating in a Bayesian way using Gaussian conditioning performs considerably better.
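The difference between the two aggregation schemes can be illustrated with a small sketch for a single latent dimension. It assumes a factorized Gaussian prior and treats each encoded context point as a noisy Gaussian observation of the latent variable, so that Gaussian conditioning reduces to a precision-weighted update. The function names and the default prior parameters are illustrative assumptions, not the actual implementation.

```python
from typing import Sequence, Tuple


def mean_aggregate(r: Sequence[float]) -> float:
    """Plain mean over encoded context points; carries no uncertainty."""
    return sum(r) / len(r)


def bayesian_aggregate(
    r: Sequence[float],      # encoded context points (latent observations)
    var_r: Sequence[float],  # per-point observation variances (all > 0)
    mu0: float = 0.0,        # assumed prior mean
    var0: float = 1.0,       # assumed prior variance
) -> Tuple[float, float]:
    """Posterior mean and variance of the latent via Gaussian conditioning."""
    # Precisions add: prior precision plus one term per context point.
    precision = 1.0 / var0 + sum(1.0 / v for v in var_r)
    var_z = 1.0 / precision
    # Each point shifts the mean in proportion to its own precision.
    mu_z = mu0 + var_z * sum((ri - mu0) / vi for ri, vi in zip(r, var_r))
    return mu_z, var_z
```

For example, with `r = [1.0, 3.0]`, unit observation variances, and a standard normal prior, the posterior has mean 4/3 and variance 1/3, whereas the mean aggregator returns 2.0 regardless of how reliable each point is. In the Bayesian variant, the posterior variance shrinks as more context points arrive, and a point with a large variance barely moves the estimate.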