Variational Inference

We are investigating new Variational Inference (VI) methods that use Gaussian mixture models (GMMs) to accurately approximate arbitrary target distributions. Our algorithms are based on natural gradients, a decomposition of the variational lower bound, and information-theoretic trust regions.

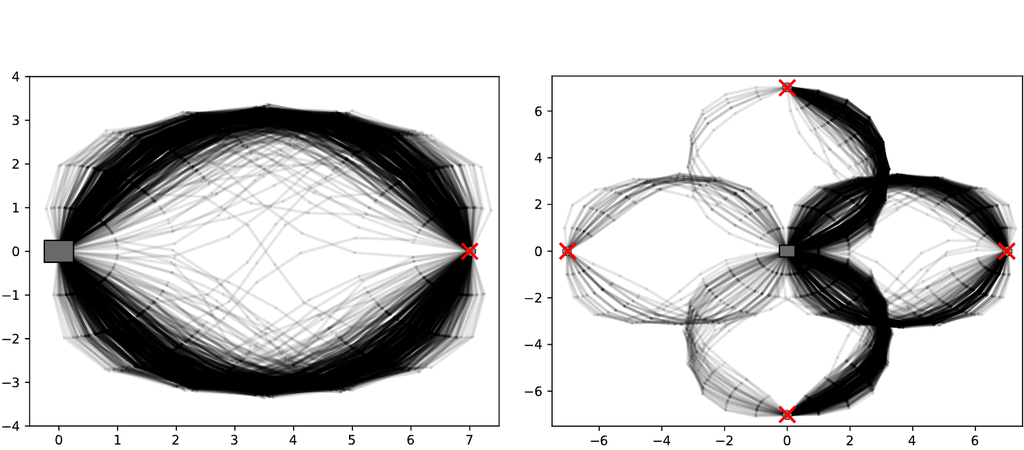

Variational inference with Gaussian mixture models (GMMs) enables learning highly tractable yet multi-modal approximations of intractable target distributions with up to a few hundred dimensions. The two currently most effective methods for GMM-based variational inference, VIPS and iBayes-GMM, both employ independent natural gradient updates for the individual components and their weights. We identify several design choices that distinguish the two approaches and test all possible combinations. This reveals a new combination of algorithmic choices that yields more accurate solutions with fewer updates than previous methods.
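To make the lower bound decomposition mentioned above concrete, consider a GMM q(x) = Σ_o q(o) q(x|o). Using log q(x) = log q(o) + log q(x|o) − log q(o|x), the variational lower bound against an unnormalised target p̃(x) can be rewritten per component as L(q) = Σ_o q(o) E_{q(x|o)}[log p̃(x) + log q(o|x) − log q(x|o)] + H(q(o)). The sketch below is a minimal NumPy illustration of this standard identity, not the group's implementation: the exact decomposition used by VIPS or iBayes-GMM may differ, and the function names (`elbo_estimates`, `component_logpdfs`) and the example target are hypothetical. It draws samples from each component and returns a direct Monte Carlo estimate of the bound alongside the decomposed one. Because both are computed on the same samples, they agree up to floating-point error.

```python
import numpy as np

def gauss_logpdf(x, mean, cov):
    """Log density of N(mean, cov) at each row of x (shape [n, d])."""
    d = mean.shape[0]
    chol = np.linalg.cholesky(cov)
    z = np.linalg.solve(chol, (x - mean).T)  # whitened residuals, shape [d, n]
    logdet = 2.0 * np.sum(np.log(np.diag(chol)))
    return -0.5 * (d * np.log(2.0 * np.pi) + logdet + np.sum(z**2, axis=0))

def logsumexp(a, axis):
    """Numerically stable log(sum(exp(a))) along the given axis."""
    m = np.max(a, axis=axis, keepdims=True)
    return np.squeeze(m, axis=axis) + np.log(np.sum(np.exp(a - m), axis=axis))

def component_logpdfs(x, means, covs):
    """log q(x|o) for every component o, shape [n, K]."""
    return np.stack([gauss_logpdf(x, m, c) for m, c in zip(means, covs)], axis=1)

def elbo_estimates(log_target, weights, means, covs, n_per_comp=500, seed=0):
    """Return (direct, decomposed) Monte Carlo estimates of the lower bound.

    log_target is a hypothetical callable giving log p~(x) for rows of x.
    """
    rng = np.random.default_rng(seed)
    log_w = np.log(weights)
    direct, decomposed = 0.0, 0.0
    for o, (m, c) in enumerate(zip(means, covs)):
        x = rng.multivariate_normal(m, c, size=n_per_comp)  # x ~ q(x|o)
        log_comp = component_logpdfs(x, means, covs)         # log q(x|o')
        log_joint = log_comp + log_w                         # log q(o', x)
        log_q = logsumexp(log_joint, axis=1)                 # log q(x)
        log_resp = log_joint[:, o] - log_q                   # log q(o|x)
        lp = log_target(x)
        # E_q[f] = sum_o q(o) E_{q(x|o)}[f]: stratified over components.
        direct += weights[o] * np.mean(lp - log_q)
        decomposed += weights[o] * np.mean(lp + log_resp - log_comp[:, o])
    entropy_w = -np.sum(weights * log_w)  # H(q(o))
    return direct, decomposed + entropy_w

# Example: a two-component GMM in 2D and a hypothetical wide Gaussian target.
weights = np.array([0.3, 0.7])
means = [np.array([-2.0, 0.0]), np.array([2.0, 1.0])]
covs = [np.eye(2), np.array([[1.0, 0.5], [0.5, 2.0]])]

def log_target(x):
    return -0.5 * np.sum(x**2, axis=1) / 9.0  # unnormalised N(0, 9 I)

direct, decomposed = elbo_estimates(log_target, weights, means, covs)
print(direct, decomposed)
```

The per-component form is what makes independent updates natural: each component o only needs expectations under its own q(x|o), with the responsibilities q(o|x) coupling it to the rest of the mixture.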

Many methods for machine learning rely on approximate inference from intractable probability distributions. Learning sufficiently accurate approximations requires a rich model family and careful exploration of the relevant modes of the target distribution...